News - Worldcoin: this is how ChatGPT creator aims to make crypto suitable for the masses before the end of 2023


Storing digital IDs, making crypto payments and paying out an unconditional basic income: Worldcoin wants to combine all of this and is set to launch its app soon. The plan is ambitious, but it also draws criticism.

The vision sounds wild: an app for everything. In the future, it is meant to let you make fee-free crypto payments, prove your identity in a tamper-proof way and even receive an unconditional basic income. Worldcoin wants to make this possible, which makes it perhaps one of the most ambitious crypto ecosystems of recent years. Behind it is Sam Altman, co-founder and CEO of OpenAI, the company behind ChatGPT. The chatbot is considered the fastest-growing Internet service of all time. High-profile investors are also backing Worldcoin, including Andreessen Horowitz and Coinbase.

The thesis behind the project: in a few years, automation and artificial intelligence will completely change the economy. From medicine to journalism, humans will increasingly be replaced by intelligent machines in many industries. Experts have been predicting this for years. Up to 80 percent of jobs could be lost. On the other hand, according to Altman, AI will lead to high productivity. The foundation behind Worldcoin speaks of an "era of abundance." Its aim is to distribute that abundance back to people through its own system. What governments have been talking about for years, Sam Altman wants to introduce via crypto: an unconditional basic income.

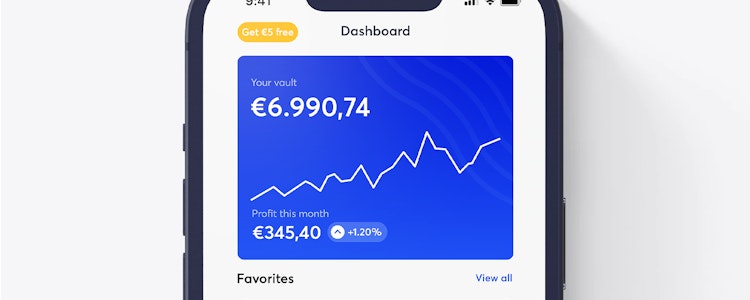

Worldcoin is built on Optimism, a layer-2 solution for Ethereum. The accompanying World App promises fee-free transactions with fiat currency or crypto. It also appears to have integrated the Ethereum Name Service, which means it is open to dApps from the ecosystem. The code reportedly remains open source. Founded in 2019, the project has been in a testing phase since 2022 and plans to launch in the summer of 2023. 80 percent of the tokens will be distributed to the community, while the team and investors will each keep 10 percent. But there is a big catch.

Iris scan required

Those who want to participate in the utopian project must have their irises scanned by a machine called the Orb. Orbs will be placed at universities and supermarkets to verify that a person is real and unique. Eyes will become a sign of humanity in an AI-dominated future. "Since no two people have the same iris pattern and these patterns are very difficult to fake, the Orb can accurately distinguish people from each other without having to gather any other information about them - not even their names," Worldcoin said.

The data is used to generate an "IrisHash" that is stored locally in the Orb. According to Worldcoin, the hash is never shared; the system only checks whether it already exists in the database. To do this, the company says it uses a privacy-preserving cryptographic method known as a zero-knowledge proof. If the algorithm finds no match, the person has passed the uniqueness check and can continue the registration process with an e-mail address, a phone number or a QR code to set up their Worldcoin wallet. All of this should happen in seconds.
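
The uniqueness check described here can be illustrated with a minimal sketch. It is not Worldcoin's implementation: the class name `UniquenessRegistry`, the method `register` and the use of SHA-256 as a stand-in for the "IrisHash" are all assumptions made for illustration. Real biometric scans vary between captures, so an exact-match hash is a simplification, and the zero-knowledge proof is not modelled at all.

```python
import hashlib


class UniquenessRegistry:
    """Hypothetical registry that keeps only hashes, never the raw iris data."""

    def __init__(self) -> None:
        # Set of hex digests that have already been registered.
        self._seen_hashes: set[str] = set()

    def register(self, iris_code: bytes) -> bool:
        """Return True if this iris code is new, False if it was seen before."""
        # Assumption: SHA-256 stands in for the proprietary "IrisHash".
        iris_hash = hashlib.sha256(iris_code).hexdigest()
        if iris_hash in self._seen_hashes:
            return False  # match found: uniqueness check fails
        self._seen_hashes.add(iris_hash)
        return True  # no match: the person may continue registration


registry = UniquenessRegistry()
first_attempt = registry.register(b"example-iris-code")   # new person
second_attempt = registry.register(b"example-iris-code")  # same person again
```

In this sketch the first registration succeeds and the repeated one is rejected, which mirrors the idea that only the hash, not the underlying scan, needs to be compared.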

Worldcoin thus claims to solve two other problems at once. It clearly distinguishes humans from machines, which will become increasingly important in the digital space. And it promises more privacy than current solutions, because once the system is established, you will no longer have to hand over your data everywhere, only the "IrisHash."

1/ A brief look inside the Orb ⚪️👀🧵 pic.twitter.com/VnM2QGfaaQ

— Worldcoin (@worldcoin) May 18, 2023

Worldcoin may already have broken laws

The privacy promise holds at least in theory. Under the current terms, users' data is in fact stored, provided they give permission. That will soon no longer be necessary, the company claims; the iris-recognition AI first has to be trained well enough. A report from MIT Technology Review also raises questions. Worldcoin allegedly recruited its first half-million users, mainly in emerging countries, using questionable methods.

Company representatives allegedly "collected more personal data than they admitted." They also "failed to obtain meaningful consent" from their test users. Instead, they allegedly lured and deceived poor people with promises of wealth in return for handing over their biometric data. According to MIT Technology Review, Worldcoin may even have violated applicable data protection laws.

The story of OpenAI should also give pause. The leading AI company began with great ideals as a nonprofit. Not much of that has remained. Since the billion-dollar deal with Microsoft, the emphasis has been on hyper-commercializing the software, starting with the sale of GPT licenses and premium access.